👋 Hey, Nikki here! Welcome to this month’s ✨ free article ✨ of User Research Academy. Each week I tackle reader questions about the ins and outs of user research through my podcast, and every two weeks, I share an article with super concrete tips and examples on user research methods, approaches, careers, or situations.

Tying the impact of research to metrics can be challenging. I struggled with it for a large part of my career. How did I take a 1x1 interview and show how it impacted our revenue? Often, it felt like a lofty goal that I would never accomplish, and for a while, I left it by the wayside. Metrics were for other roles, not user research.

While that attitude served me for a little while, there was a bumpier road ahead that I couldn’t see. After a round of layoffs and struggling to find a new role, I finally accepted a job offer.

However, I quickly realized how much of an uphill battle my role would be because no one, besides the person who hired me, believed in user research. I didn’t know people could self-select into believing or not believing in a literal craft, but here we were. I was terrified of losing another job and being unable to pay rent, so I tried to embrace the challenge.

The number one question I continuously got bombarded with was, “What is the impact of user research?”

I had the jaws of a fish as I opened and closed my mouth, unable to create a concrete answer. Eventually, I defaulted to the only things I knew to say:

“We understand our customers better so we can make customer-centric decisions.”

“If we understand people’s needs or pain points, we create relevant products for them.”

“We can reduce time guessing or basing ideas off assumptions that might fail.”

Although I had witnessed user research do many amazing things, I couldn’t fully articulate the impact in a way my colleagues understood or cared about. This plagued me. I constantly felt defeated and unvalued because I didn’t know how to tie something like qualitative research back to what people cared about: money.

And for a long time, I “stood my ground,” which really meant that I was hugely antagonistic and stubborn when it came to incorporating business into user research. I used to say that I, as a user researcher, was not there for the business and didn’t care about the business. I was there for the user. And usually, in my mind, the business and the user were pitted against each other as villain and victim.

To get to the point, this did not work out for me.

By creating a mindset and environment where it was me + users versus the business, I was stuck in the middle of a complete mess. I lacked trust with my colleagues, got into fights (literally, I yelled at people 🙈), and spun on the hamster wheel of trying to prove the value of user research without business.

Fast forward a few months when I sat in a performance review. My craft, interviewing, usability testing, synthesizing, was spot-on. I was good, if not great, at conducting user research. But I had a huge glaring gap in skills like stakeholder management, tying research to the business, and workshop facilitation.

I was super bummed about that performance review and, unfortunately, didn’t have much guidance on how to make it better. Was I going to lose my job over it? Likely not. However, I quickly saw there was no way I was going to advance in my career if I didn’t figure something out. And I knew exactly what I needed to work on.

Formulating a plan

It took me a few weeks to do some research and formulate a plan for how I was going to tackle this issue.

The first thing I did was research “business.” It was tough because it was such a broad area and scope. I wasn’t super familiar with how businesses operate, what goals they had, or what metrics were important to them, so it took a lot of Googling and some embarrassing question-asking.

I learned how important revenue was to a company. It shouldn’t have been such an “ah-ha” moment, but in my need to be so user-centric, I had completely lost sight of the bigger picture.

A company (usually) needs to make money to create a product/service. Customers want a product/service that helps them achieve their goals or alleviate a pain point. When they find that experience, they give the company money.

A product/service aligned with customers’ needs = more money for a company.

User research could help determine the experiences, needs, and pain points of users to increase the amount of money a company was making.

Finally, I started to wrap my head around this concept, but I still wasn't sure how to apply it because that sounded like one of the fishy and vague answers I gave about user research impact. I wanted something more concrete.

Stakeholder interviews

I was in the green with some of my stakeholders, and definitely not a fan favorite with others (remember those fights I spoke about?), so this part was very challenging for me at first. But I knew I needed to talk to my stakeholders to learn more about their goals and the business. Without this, how was I going to draw concrete ties between user research and impact?

Biting my tongue, I bought a lot of “I’m sorry, please talk to me” lunches and coffees. I started the conversation with sharing that I was sorry about any disagreements made and sharing why I had acted in that way. Then I spoke about what and how I was trying to change. Most of my stakeholders were super kind and understanding, and they also apologized back. For a few, we were never really able to repair the relationship, but, hey, 80/20 rule, right?

As I spoke to these stakeholders, I learned about their goals and started to see patterns and trends emerge in what they were talking about. Many of their concerns and goals centered on the same terminology. With this, I went back to Google to investigate further.

Uncovering the pirate metrics

Through my research, I stumbled on something called the pirate metrics, named aptly for the acronym: AARRR.

This model, coined by Dave McClure* in 2007, highlights the five most critical metrics for businesses to track for success. Not only did these make sense to me based on my desk research, but they were also commonly referred to in my stakeholder interviews.

I wanted to understand how to concretely tie user research to these hugely important metrics. With that, I would no longer feel as much like a fish out of water. Instead, I could start to answer the questions about my impact more confidently and know that I was helping the business and users.

*Dave McClure is not a stunning human, having been accused of sexual harassment. I’m not a fan of him but wanted to cite the original source of the pirate metrics. While he sucks, hopefully, we can leverage this model for the greater good.

Tying user research to the metrics

Off I went on this adventure that would change my career forever. I dedicated as much time as possible to understanding these pirate metrics and figuring out how I could tie user research projects to each of them.

This is everything I learned and still practice to this day for each of the pirate metrics. I’ll be using a specific example from a travel company I used to work for.

Acquisition - how people find and are introduced to your product/service

Acquisition is all about getting new customers into your product/service so that they, essentially, know it exists. There are many ways a company can do this, such as:

SEO

Marketing (including email and social media)

Sales

Paid advertising

When I was working at the travel company, acquisition was hugely important to us because we didn’t have complete market share and, instead, shared the space with quite a few competitors. Getting customers without a ridiculously high customer acquisition cost (CAC) was incredibly important to our revenue.

We struggled a lot with finding new customers because of the sheer amount of competition out there and also because of the trust (or lack thereof) that comes with using a third-party ticketing product.

However, I knew how important it was to help improve our acquisition, so I met with the acquisition product manager and we brainstormed the most important metrics within the acquisition space:

Increasing traffic to our main page

Reducing bounce rate from our main page (without any clicks)

Increasing time spent on page

Understanding the breakdown of where our traffic is coming from

These are all relatively high-level, top of funnel (TOFU) metrics and, to me, they seemed quite broad and generic, but it was the best we could do. (PS - these things all take time to learn and I’m still learning more about metrics/business so always take the time to experiment).
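If it helps to see the arithmetic behind two of these metrics, here is a minimal Python sketch of how bounce rate and time on page could be calculated. The `Session` record and the sample numbers are made up for illustration; they are not data from the travel company, and your analytics tool may define a "bounce" differently.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One visit to the main page (hypothetical record shape)."""
    clicks: int             # clicks made on the page before leaving
    seconds_on_page: float  # time spent on the page


def bounce_rate(sessions):
    """Share of sessions that left the page without a single click."""
    if not sessions:
        return 0.0
    bounces = sum(1 for s in sessions if s.clicks == 0)
    return bounces / len(sessions)


def average_time_on_page(sessions):
    """Mean number of seconds spent on the page per session."""
    if not sessions:
        return 0.0
    return sum(s.seconds_on_page for s in sessions) / len(sessions)


# Invented sample data, for illustration only.
sessions = [
    Session(clicks=0, seconds_on_page=4.0),
    Session(clicks=3, seconds_on_page=42.0),
    Session(clicks=0, seconds_on_page=6.0),
    Session(clicks=1, seconds_on_page=28.0),
]

print(f"Bounce rate: {bounce_rate(sessions):.0%}")
print(f"Average time on page: {average_time_on_page(sessions):.1f}s")
```

Running the same calculation before and after a content change gives you a simple before/after comparison to bring to stakeholders.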

Next, I started to identify some research projects I could do to help these TOFU metrics (I love calling something in product Tofu). I came up with the following projects:

Content testing through a highlighter test. I decided on a highlighter test because it is a great method to help determine value proposition and what information is necessary to help users achieve their goals. It can also reduce the amount of text on a page to focus on what is essential to the user. During this test, I copied and pasted the text from our homepage into a Google Doc and asked participants to use three colors:

Green = text that was helpful to them

Orange = text that was confusing

Red = text that was unnecessary

After they highlighted, we went through the content and I asked them follow-up questions on why they highlighted certain things in the specific colors, how they might reword confusing content, and also if there was content missing that might be helpful.

We then went on to test A/B versions of copy and content to see what was most effective. This was to help reduce bounce rate and increase time spent on page.

Five-second tests. Once we updated our copy, I wanted to use five-second tests to understand what message we were communicating to our users. Could people understand we were a ticketing platform? Did they understand what we were able to give them or what needs we were trying to meet? We used this to continue to refine our message and clarify how we could help users achieve their goals from the moment they laid eyes on our platform. This was also to reduce bounce rate and increase time spent on page.

Closed word choice survey. Finally, we wanted to understand the types of words people associated with us, namely around the “trust family” since trust was a huge object of concern with us being a third-party ticket platform. I set up a survey with several images of our website and sent it out to participants asking them to select all the words they would use to describe our platform. I used words like:

Empowering

Approachable

Disconnected

Friendly

Irrelevant

Patronizing

Untrustworthy

Trustworthy

Skeptical

Easy

Relevant

Simple

I also followed up with some open-ended questions, such as “please describe why you chose those words,” to try to get further insight. We ended up having to contact some respondents for quick interviews to better understand why they chose certain words. This was also to reduce bounce rate.
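To make the analysis side of a closed word choice survey a bit more concrete, here is a small Python sketch of how the selections could be tallied. The responses are invented, and the grouping of words into a "negative trust" set is my own assumption for the example, not a standard classification.

```python
from collections import Counter

# Hypothetical grouping of survey words; adjust it to your own word list.
NEGATIVE_TRUST = {"Untrustworthy", "Skeptical", "Disconnected"}


def tally_words(responses):
    """Count how many respondents selected each word."""
    counts = Counter()
    for selected in responses:
        counts.update(set(selected))  # count a word once per respondent
    return counts


def share_selecting(responses, words):
    """Fraction of respondents who picked at least one word from `words`."""
    if not responses:
        return 0.0
    hits = sum(1 for selected in responses if words & set(selected))
    return hits / len(responses)


# Invented sample responses: one list of selected words per respondent.
responses = [
    ["Easy", "Trustworthy", "Simple"],
    ["Skeptical", "Untrustworthy"],
    ["Friendly", "Easy"],
    ["Skeptical", "Simple"],
]

counts = tally_words(responses)
print(counts["Skeptical"], "respondents chose 'Skeptical'")
print(f"{share_selecting(responses, NEGATIVE_TRUST):.0%} chose a negative trust word")
```

Tracking that last percentage across survey rounds is one way to see whether messaging changes are shifting perception.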

Lastly, I did some work with the four forces diagram in Jobs to be Done. This looks at why people stay with or switch between products when it comes to their habits, anxieties, pushes, and pulls. I conducted about 15 interviews with people who didn’t use us but did use competitors, to understand why they used several different competitors and why they switched between them. It was super interesting to learn about people’s anxieties and habits when it came to travel, and this helped us create some great messaging to foster trust and make people look at us as a reliable platform. This helped a lot with reducing bounce rate.

Activation - how people begin to use your product/service

It’s all good if people find you, but that first interaction is key. After running my business for almost two years full-time now, I know how important it is to activate users and get them to take that first step with you.

There are many ways to activate users and it hugely depends on your product/service/organization, but when you think about activation, think about the primary conversion metrics that determine the success of certain channels and campaigns, rather than high-level or micro-conversions. This could look like a funnel:

Someone comes to your website

They see a value in your work

They try a free trial or book a demo or sign up for a newsletter

Activation is the beginning of your relationship with the customer. Before this, they’re anonymously researching your business and competitors before they take any specific action that allows you to begin directly engaging with them.

When it came to the travel company I was working at, we actually didn’t have too many activation channels besides “booking a ticket,” which is a conversion but there were many steps prior to that conversion. So, I sat down with the product manager and we thought through some activation metrics:

Increase number of newsletter signups

Increase number of trip searches

Increase first time ticket purchases

This was tough for me to apply user research on because I wasn’t sure exactly how to impact activation without going into full-blown conversion rate mode. I also wanted to test some of the other metrics outside of purchasing a first ticket like just searching or signing up for our newsletter.

So I came up with a few project ideas to try to help move these metrics:

Walk-the-store interviews. I spoke to about 15 users who hadn’t yet purchased with us to understand how they felt when they landed on our page and when they were searching for a trip. In this interview, they shared their screen and took me through their reactions and perceptions of what they saw and what they were doing. It was very much qualitative and gave us some great feedback on confusing elements and components, as well as some glaring mistakes in the experience. This was to help increase trip searches.

1x1 interviews with first-time purchasers. I then wanted to dive into the first-time purchasing experience with users so I screened for people who had recently purchased their first ticket with us. The reason behind this (and me not doing a usability test at this phase) was to understand the qualitative side of their experience. How had it been for them? What had been confusing? What had been missing? Another 15 people walked us step-by-step through their first experience which gave rise to even more pain points and improvements we could make in the experience. This study hugely helped with increasing people who purchased their first ticket since we could streamline the experience for them.

I struggled quite a lot with newsletter sign-ups and ended up continuously surveying our audience to understand why they signed up for our newsletter. I knew it would be tough to understand why people didn’t, so I decided instead to tap into what we had and could easily find out. Through understanding why people signed up and the value they got from it, we were better able to articulate that in our content. We also had an unsubscribe survey to understand why people unsubscribed and find improvements from that side. There were fewer responses to the unsubscribe survey, but we were still able to take some actions from it. This hugely helped with increasing newsletter sign-ups.

Retention - how people come back to and continue to use your product/service

Ah, the bread and butter. We get people, but nothing is better than keeping people (as serial killer-ish as that sounds). Retention is king/queen/royalty because when people purchase multiple times from you, their value to you as an organization skyrockets.

There are many ways to measure retention like:

Customer lifetime value

Churn rate

Returning to your website

Opening emails repeatedly

Checking your product repeatedly in a given timeframe

Now, I’m just going to say it: churn is a tricky subject, which I will cover in its own full article because churn research gives me the heebie-jeebies 😱.

Retention was another big hitter for us since our CAC was so high. We were getting customers, but we weren’t retaining them, which was not helping grow our revenue. So we really focused on the following metrics:

Increasing repeat order rate and customer lifetime value

Increasing repeat searches

Increasing the number of accounts made
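Repeat order rate can be defined in a few ways. As a rough illustration, here is a Python sketch using one common definition: the share of purchasing customers who have placed two or more orders. The definition, the function name, and the sample orders are assumptions for the example, not how the travel company measured it.

```python
from collections import Counter


def repeat_order_rate(order_customer_ids):
    """Share of purchasing customers with two or more orders.

    `order_customer_ids` holds one customer id per order placed.
    """
    orders_per_customer = Counter(order_customer_ids)
    if not orders_per_customer:
        return 0.0
    repeaters = sum(1 for n in orders_per_customer.values() if n >= 2)
    return repeaters / len(orders_per_customer)


# Invented sample: seven orders placed by four customers.
orders = ["ana", "ben", "ana", "cleo", "dev", "ben", "ana"]

print(f"Repeat order rate: {repeat_order_rate(orders):.0%}")
```

Whatever definition you pick, agree on it with your product manager first so that everyone reads the number the same way.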

Retention research is the most fun for me, so I really leaned into these projects and ran:

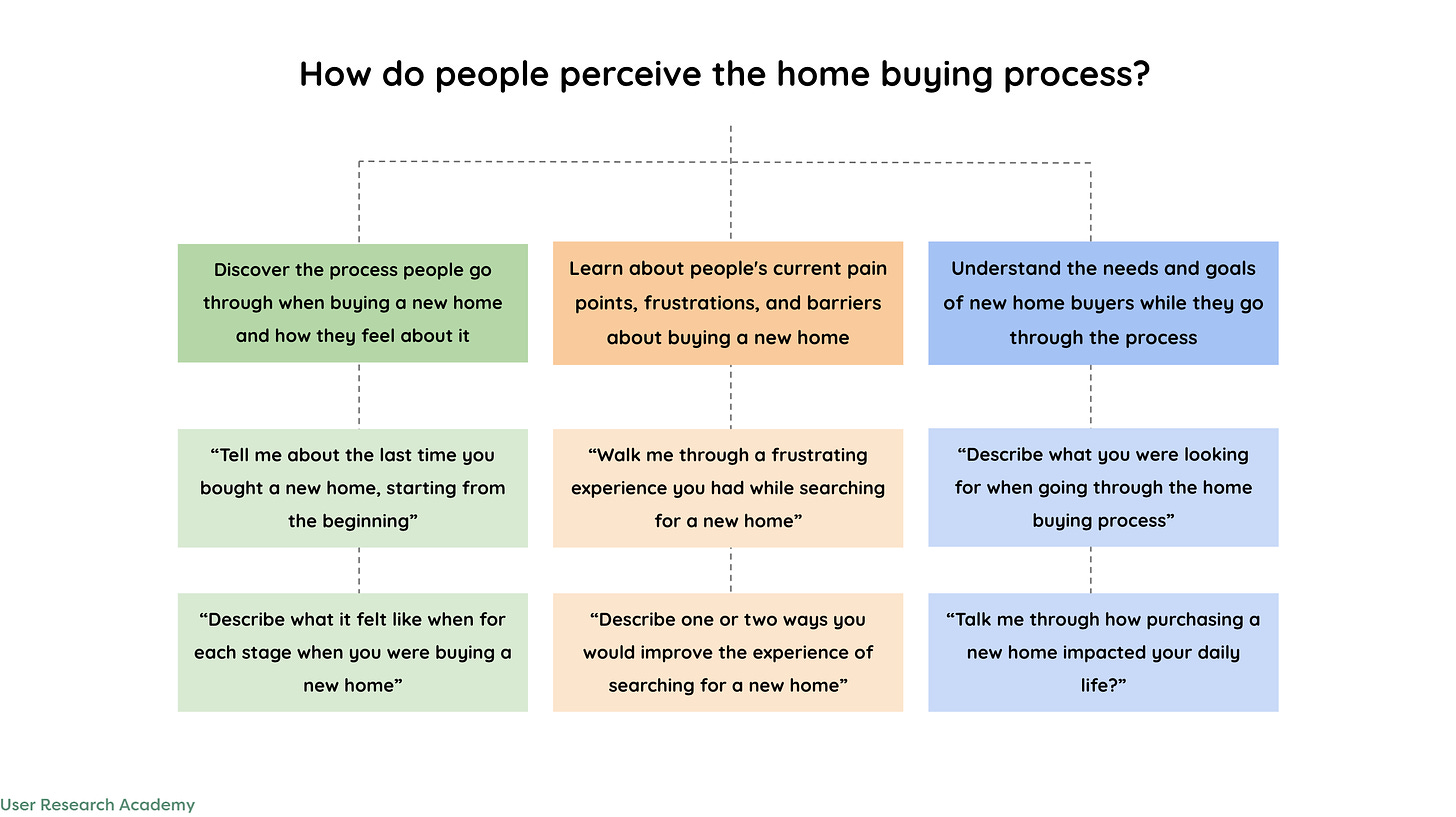

1x1 generative research interviews. Retention is all about creating a product that helps people achieve their goals more effectively and efficiently than competitors while alleviating any pain points along the way. To me, generative research interviews are a slam dunk in getting that information. I took these interviews away from the product and into the complexities of planning a trip from end to end, including people’s needs, goals, and pain points. I used the TEDW framework to ensure open-ended questions:

“Tell me…” or “Talk me through…”

“Explain….”

“Describe….”

“Walk me through….”

This study led to some key understandings of what our customers needed and led to an understanding of where we were failing to meet those needs and alleviate those pain points. We were able to pivot and change the product in ways to better align with users through things like easier price comparison, eco-friendliness of trips, and sharing trips with friends. This led to increasing repeat order rate and customer lifetime value.

Usability testing (quantitative and qualitative). I first started with some qualitative usability testing, going through the process of booking a trip and asking people about their experience as they went through it. On its own, this study led to huge learnings on where the experience of our product fell short: clunky filters, an inability to link to trips, no favoriting, etc. Once the qualitative side was done and we had made improvements, I went on to benchmark the current experience using task-based usability tests, measuring time on task and task success, as well as the Single Ease Question and System Usability Scale to gather more data on usability and satisfaction. We saw people struggle with basic tasks and were able to make critical fixes that helped increase the usability of our platform, which directly contributed to increasing repeat searches as well as increasing repeat order rate and customer lifetime value.
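If you haven’t scored a System Usability Scale questionnaire before, the standard rule is: ten items answered on a 1 to 5 scale, odd-numbered items contribute (answer minus 1), even-numbered items contribute (5 minus answer), and the sum is multiplied by 2.5 to give a score from 0 to 100. Here is a minimal Python sketch of that rule. The function name and the sample answers are made up for illustration.

```python
def sus_score(answers):
    """Score one participant's SUS questionnaire.

    `answers` is a list of ten integers from 1 to 5, in questionnaire order.
    Returns a score between 0 and 100.
    """
    if len(answers) != 10:
        raise ValueError("SUS needs exactly ten answers")
    if any(a < 1 or a > 5 for a in answers):
        raise ValueError("each answer must be between 1 and 5")

    total = 0
    for item_number, answer in enumerate(answers, start=1):
        if item_number % 2 == 1:
            total += answer - 1  # odd items are positively worded
        else:
            total += 5 - answer  # even items are negatively worded
    return total * 2.5


# Invented answers from two participants.
participants = [
    [4, 2, 5, 1, 4, 2, 5, 1, 4, 2],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]

scores = [sus_score(p) for p in participants]
print("Individual scores:", scores)
print("Benchmark (mean):", sum(scores) / len(scores))
```

The mean across participants is the number to track between benchmark rounds, alongside time on task and task success.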

Accounts were really difficult to understand because, well, to be honest, we didn’t really have any value in our accounts. You didn’t need one for anything other than storing your data to use more easily again (e.g., storing credit card information). I deprioritized this and, unfortunately, wasn’t able to get to it before I left the company. If I could have, I would probably have run some surveys to understand the current value of the account (if there was one), and maybe run interviews with people using competitor accounts to understand the value they were getting from those.

Referral - how people share your product/service with others (positive and negative sentiments)

Referral is all about people spreading the word about your product/service with other people - either with a positive or negative sentiment. Referrals can be great because, if positive, they can almost feel like free customers.

Referral metrics can look like this:

Engaging with referral campaigns or emails

Using a referral bonus or program within your product/service

Leaving reviews

When we sat down to talk about referrals, we had some metrics we wanted to start tracking and thinking about:

Increasing sign-ups through a bonus referral program (that we hadn’t yet created)

Increasing our review rating

Increasing shared trips

Referrals were a very interesting area for me and one that I wish I had invested more time into, but we had a lot of other priorities at the time. I didn’t have the bandwidth to dive in and learn how to conduct referral-related research as much as I wanted. However, I was able to sneak in some research when it came to referrals:

Data triangulation. We had quite a few reviews on our apps, and I decided it might be interesting (and fun, in a sadistic way) to go through these reviews and sort the data. I did this in a very manual way, combing through the qualitative reviews and creating a Miro board with categories and tags. I wish I had a photo for you, but I didn’t manage to snag one. The categories consisted of:

Pain points/problems people were coming up against and complaining about

Feature requests which I needed to dig into more deeply in future research because they were quite shallow

Positives of what we were doing well that we could use more of

Within these major categories, I found patterns and trends and used the number of reviews/mentions to help me weigh them for prioritization. For instance, a huge pain point people encountered in our system was a delayed email confirmation that ended up freaking people out that they had bought a ticket from a bad third-party app. We were able to fix this relatively quickly and easily. This project directly impacted our metric of increasing our review rating.
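I did this by hand on a board, but the counting step is easy to sketch in Python if your tagged reviews live in a spreadsheet export. The tuples below are invented examples (loosely based on the issues mentioned in this article), and `rank_tags` is a hypothetical helper name.

```python
from collections import Counter

# Invented sample: one (category, tag) pair per tagged review.
tagged_reviews = [
    ("pain point", "delayed confirmation email"),
    ("pain point", "delayed confirmation email"),
    ("feature request", "share trip"),
    ("positive", "easy booking"),
    ("pain point", "clunky filters"),
    ("pain point", "delayed confirmation email"),
    ("feature request", "share trip"),
]


def rank_tags(tagged, category):
    """Return (tag, mention count) pairs for one category, most mentioned first."""
    counts = Counter(tag for cat, tag in tagged if cat == category)
    return counts.most_common()


for tag, mentions in rank_tags(tagged_reviews, "pain point"):
    print(f"{mentions} mentions: {tag}")
```

Mention counts are only a starting point for prioritization. A rarely mentioned issue can still be severe, so I would weigh the counts alongside what people actually wrote.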

1x1 interviews and concept testing. Since one of our main metrics was about a referral program we hadn’t yet created, I had to start from scratch on this project, which was quite exciting. I held about 15 1x1 interviews about referral programs where I asked really broad questions on people’s previous experiences with referral programs both inside and outside of the travel sphere (previous experiences with products, even if they are outside of your industry, are much more reliable than asking future-based questions). We were able to understand some key pain points and the needs of our customers. With this, we built a concept of a referral program, which I then used to run a concept test with 12 more people. We evolved the concept with this feedback and eventually shipped the feature, at which point we could finally start tracking our metric of increasing sign-ups through referrals.

Usability testing. We had heard from previous research done in one of our retention projects that sharing trips easily with others was an important feature for our users. We thought that increasing trip shares (especially with people that didn’t have the app or an account) would be an interesting metric to track because it may boost people’s motivation to use us. We conducted a usability test on the sharing trips functionality and then monitored its usage. We found, interestingly, that when users shared trips with others, they also shared their referral code, which helped us increase sharing trips and sign-ups through referrals simultaneously.

Revenue - how people are generating revenue (against costs) for your product/service

Now, all of the above stack up into and impact revenue. If you aren’t getting new customers and retaining them, you likely aren’t making money. Revenue can be broken down in many different ways, such as:

Revenue that exceeds CAC

Monthly recurring revenue

Minimum revenue

Breakeven revenue

There was no single project whose impact I could attribute directly to revenue; rather, it was the accumulation of the multiple projects I did with this new intention of directly impacting the business as much as I could.

Because I was able to impact the metrics we determined above, I could indirectly link my work back to any revenue shifts that we saw in the business.

Try it!

Through this experience, the entire trajectory of my career shifted in such a positive way. I started thinking about projects not just with the user’s goals in mind but also the business’s. It hugely accelerated my career to tie these knots together and show the value of user research through this lens, which stakeholders greatly appreciated.

I recommend starting with one of these letters/topics and going to a trusted colleague to talk through metrics and potential user research projects that could impact the metrics. This is largely a brainstorming session at first, especially if you are new to metrics + UXR, so a trusted colleague who is open to talking through potential ideas and experimenting is key.

If you don’t have any colleagues open to this discussion, try to brainstorm on your own (this is something I did for a while before I found trusted colleagues) and talk to other peers or join a community - you can check out my user research membership - to get feedback and ideas.

But the first thing is to start with one of the letters and go from there. You might not get it right the first time (I certainly didn’t), but it is a great skill to practice and hone over time!