How to run a quantitative usability test

And use it to continuously prove your impact

👋 Hey, Nikki here! Welcome to this month’s ✨ free article ✨ of User Research Academy. Three times a month, I share an article with super concrete tips and examples on user research methods, approaches, careers, or situations.


“But it gives us numbers.”

“No thanks.”

That’s the first thing I said when faced with the suggestion of conducting a quantitative usability test.

I was more than happy with my conversation-filled qualitative usability tests, where I asked people about their immediate reactions, how they perceived the screens, and what they found confusing or missing. It was a routine I quickly felt comfortable in and enjoyed.

But there’s always a but, isn’t there?

It came when I was sitting with my team, chatting about a flow that many of our users were having trouble with. I had triangulated data from previous research where the topic had come up, from customer support tickets, and from account managers.

I told my team that we had enough to understand most of the pain points, especially the important ones, and make changes. I was at a point in my career and at the organization where I was privileged enough to be able to say no to user research requests.

Luckily (at the time, it felt unfortunate, but for my career, it was a good thing), my manager was sitting in the meeting. He’s one of the most fantastic managers I’ve ever had (Hi, John 👋🏻), and he asked me a pointed question:

“How will we know if the changes we make improve the usability?”

Of course, John already knew the answer to his question, but he directed it to me. I knew what he was going for, but I was terrified of the answer.

All I wanted to do was make the changes and then ask the users if the changes we made were helpful — I was even willing to sift through the customer support tickets for the next few months to see if complaints decreased. Anything to stay away from the numbers.

But my team was beaming. This was exactly what they wanted: a clear and straightforward way to measure usability and progress. There was no backing out. It was finally time for me to conduct quantitative usability tests.

And I am so glad for that push because they have become an absolutely essential part of my user research toolkit and have also helped me become a well-rounded (and promoted!) user researcher.

What is quantitative usability testing?

Usability tests, as a whole, are about having participants attempt the most common and important tasks on a product/service. While you conduct the test, you, as a researcher, look for problems the participant runs into. You then take these problems to your team and, together, brainstorm ways to fix them; some fixes are simple, others complex.

With qualitative usability tests, you are talking to the participants and describing the different reactions, perceptions, or issues they encounter.

With a quantitative usability test, you can still describe the problems, but you also measure:

How many people encountered a problem

How many people were able to complete the tasks

The time it took them to complete tasks

How many errors participants ran into

What types of errors participants encountered

Participants’ perceptions of usability

With quantitative usability testing, you can uncover a lot of important information that helps you demonstrate the impact of your research. For instance, when I was working at a travel company, we conducted a quantitative usability test on our checkout flow.

We found that people were taking a long time to fill out information that ultimately wasn’t that relevant and, thus, dropping off and abandoning the flow for a competitor that was easier to use.

Based on these results, we made some significant changes and retested the flow after the improvements were made. We reduced the time it took to fill out information by 50% (making it faster than on the competitor’s product), and we reduced abandonment by 35%. This increased revenue by £75,000 annually.

Big impact.

When it comes to measuring usability, we can break that down into three major areas:

Effectiveness: Whether a user can accurately complete tasks and an overarching goal

Efficiency: How much effort and time it takes for the user to complete tasks and an overarching goal accurately

Satisfaction: How comfortable and satisfied a user is with completing the tasks and goal

Each of these areas has its own metrics you can use to quantify it.

Effectiveness

Task Success: This simple metric tells you if a user could complete a given task (0=Fail, 1=Pass). You can get fancier with this by assigning more numbers that denote the difficulty users had with the task, but you need to determine the levels with your team before the study.

The number of errors: This metric gives you the number of errors a user committed while trying to complete a task. It also gives you insight into the common mistakes users make while attempting the task. If many users try to complete a task in a way the design doesn’t anticipate, a common pattern of errors may emerge.

Single Ease Question (SEQ): The SEQ is one question (on a seven-point scale) measuring the participant's perceived task ease. Ask the SEQ after each completed (or failed) task.

Confidence: Users rate, on a seven-point scale, how confident they are that they completed the task successfully.
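
As a minimal sketch of the error count described above, assuming you log each error as a (participant, task, error type) entry during or after sessions, Python’s `collections.Counter` can tally errors per participant and surface the most common error types. The log entries and error-type labels are hypothetical placeholders; your team defines the real categories.

```python
from collections import Counter

# Hypothetical error log: one (participant, task, error_type) entry per error.
# Define your own error types with your team before the study.
error_log = [
    ("P1", "search", "wrong date field"),
    ("P1", "search", "missed destination suggestion"),
    ("P2", "search", "wrong date field"),
    ("P3", "filter", "used review score instead of star rating"),
    ("P4", "search", "wrong date field"),
]


def errors_per_participant(log, task):
    """Errors each participant made on one task (absent participants count as 0)."""
    return Counter(p for p, t, _ in log if t == task)


def most_common_errors(log, task):
    """Error types on one task, most frequent first."""
    return Counter(e for _, t, e in log if t == task).most_common()


print(errors_per_participant(error_log, "search")["P1"])  # 2
print(most_common_errors(error_log, "search")[0])         # ('wrong date field', 3)
```

A repeated error type at the top of the list is usually the first place to look for a shared design problem.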

Efficiency

Time on Task: This metric measures how long it takes participants to complete or fail a given task. It gives you a few options to report: average task completion time, average task failure time, or overall average task time (across both completed and failed tasks).

Number of errors: With this metric, you count the number of errors participants encounter while trying to complete a given task. After the test, you can also categorize the types of errors participants encountered to understand if there are trends and patterns across the errors.

Subjective Mental Effort Question (SMEQ): The SMEQ allows users to rate how mentally demanding a task was to complete.
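
The three time-on-task averages described above can be sketched as follows, assuming each attempt is recorded with its duration in seconds and whether the participant completed the task or gave up. The participant data is made up for illustration, and these are plain arithmetic means.

```python
from statistics import mean

# Hypothetical attempts at one task: time on task in seconds, and whether
# the participant completed it (True) or gave up (False).
attempts = [
    {"participant": "P1", "seconds": 95.0, "completed": True},
    {"participant": "P2", "seconds": 140.0, "completed": True},
    {"participant": "P3", "seconds": 210.0, "completed": False},
    {"participant": "P4", "seconds": 120.0, "completed": True},
    {"participant": "P5", "seconds": 180.0, "completed": False},
]


def time_on_task_summary(attempts):
    """Average completion, failure, and overall time on task (seconds).

    Each average is None when there are no attempts in that group.
    """
    done = [a["seconds"] for a in attempts if a["completed"]]
    failed = [a["seconds"] for a in attempts if not a["completed"]]
    every = [a["seconds"] for a in attempts]
    return {
        "avg_completion_time": mean(done) if done else None,
        "avg_failure_time": mean(failed) if failed else None,
        "avg_overall_time": mean(every) if every else None,
    }


summary = time_on_task_summary(attempts)
print(summary["avg_failure_time"])  # 195.0
print(summary["avg_overall_time"])  # 149.0
```

Reporting completion and failure times separately keeps long give-ups from hiding how quickly successful participants finished.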

Satisfaction

System Usability Scale (SUS): The SUS has become an industry standard and measures the perceived usability of a product or system. Because of its popularity, you can reference published statistics (for example, the average SUS score is 68).

Usability Metric for User Experience (UMUX/UMUX-Lite): The UMUX is increasingly used as an alternative to the SUS because it is shorter (four items, or two for the UMUX-Lite) and easier to administer. It also measures perceived usability.
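
Both questionnaires need a scoring step before you can compare them with anything. The sketch below uses the standard SUS scoring (ten items rated 1 to 5, alternating positive and negative wording, summed and multiplied by 2.5) and the standard UMUX scoring (four items rated 1 to 7, items 1 and 3 positive, items 2 and 4 negative, rescaled to 0 to 100). Note that the SUS average of 68 is on this 0 to 100 scale and is not a percentage. Some teams also apply a published regression adjustment to make UMUX-Lite scores comparable to the SUS; this sketch returns only the raw score. The responses are hypothetical.

```python
from statistics import mean


def sus_score(responses):
    """Score one participant's SUS (10 items, each rated 1-5) on a 0-100 scale.

    Odd-numbered items are positively worded: contribution = response - 1.
    Even-numbered items are negatively worded: contribution = 5 - response.
    The contributions (0-40 in total) are multiplied by 2.5.
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs 10 responses, each from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        total += response - 1 if item_number % 2 == 1 else 5 - response
    return total * 2.5


def umux_score(responses):
    """Score one participant's UMUX (4 items, each rated 1-7) on a 0-100 scale.

    Items 1 and 3 are positively worded: contribution = response - 1.
    Items 2 and 4 are negatively worded: contribution = 7 - response.
    The contributions (0-24 in total) are rescaled to 0-100.
    """
    if len(responses) != 4 or any(r not in range(1, 8) for r in responses):
        raise ValueError("UMUX needs 4 responses, each from 1 to 7")
    q1, q2, q3, q4 = responses
    return ((q1 - 1) + (7 - q2) + (q3 - 1) + (7 - q4)) / 24 * 100


def umux_lite_score(q1, q3):
    """Raw UMUX-Lite score (0-100) from the two positively worded items."""
    if q1 not in range(1, 8) or q3 not in range(1, 8):
        raise ValueError("UMUX-Lite responses must be from 1 to 7")
    return ((q1 - 1) + (q3 - 1)) / 12 * 100


# Hypothetical SUS responses, one list per participant, in questionnaire order.
sus_responses = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],  # scores 75.0
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],  # scores 100.0
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],  # scores 50.0
]
print(mean(sus_score(r) for r in sus_responses))  # 75.0
print(round(umux_score([6, 2, 6, 3]), 1))         # 79.2
print(round(umux_lite_score(6, 6), 1))            # 83.3
```

Score each participant first and then average the scores; averaging raw item responses across participants before scoring can hide how individual participants felt.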

Now, there are a lot of metrics here, and I don’t recommend measuring them all during one test, especially if you are just starting out, and doubly so if you are running moderated tests. I learned the hard way, juggling a stopwatch, judging whether someone was successfully completing a task, and counting errors all at once. It was a mess.

But before we dive into how to run a quantitative usability test, let’s talk through when they are useful.

When to run a quantitative usability test

There is a time and a place for everything, including quantitative usability testing. How do you know when it’s appropriate to run a quantitative usability test, especially rather than a qualitative one?

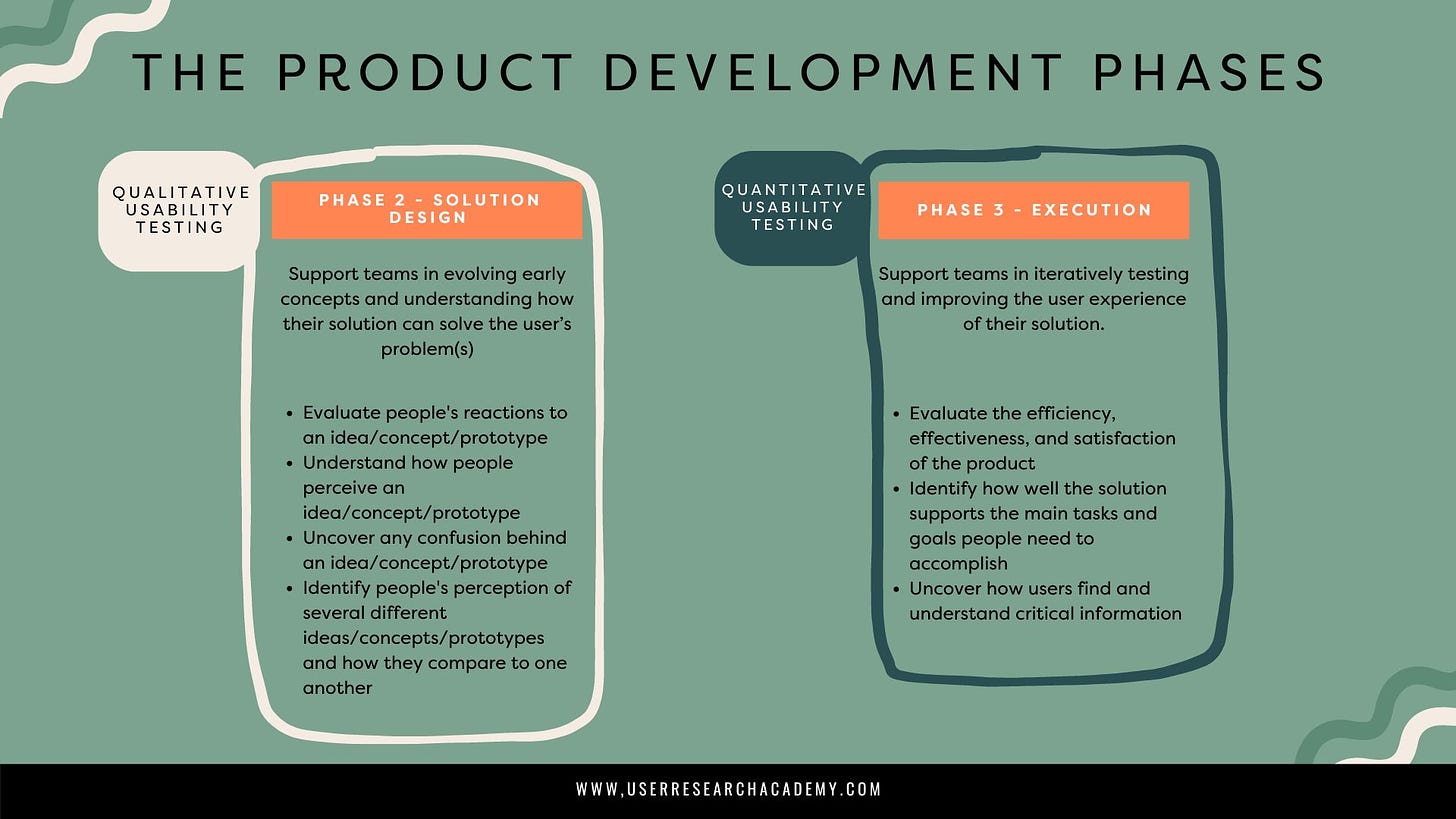

Quantitative and qualitative usability tests have different goals and are appropriate for different parts of the product development process.

Qualitative usability tests are much more about exploring people’s feelings, reactions, and perceptions of an idea/prototype. The goals of this type of study are to explore and get early feedback, and the ideas are usually less defined.

On the other side, quantitative usability testing is about evaluating the efficiency, effectiveness, and satisfaction of the product/service. You can absolutely ask questions about perceptions, but it is usually at the end of the study after the measurements are complete.

If you already have a solid idea of the solution and you are ready to measure the actual usability, quantitative usability testing is the perfect approach.

How to run a quantitative usability test

Now that we’re clear on what quantitative usability testing is and when to use it, it’s time to go through how to actually run one of these fantastic tests.

We’re going to go through a step-by-step guide with an example of how to run a quantitative usability test effectively and efficiently (meta, I know 😂).

Create a plan

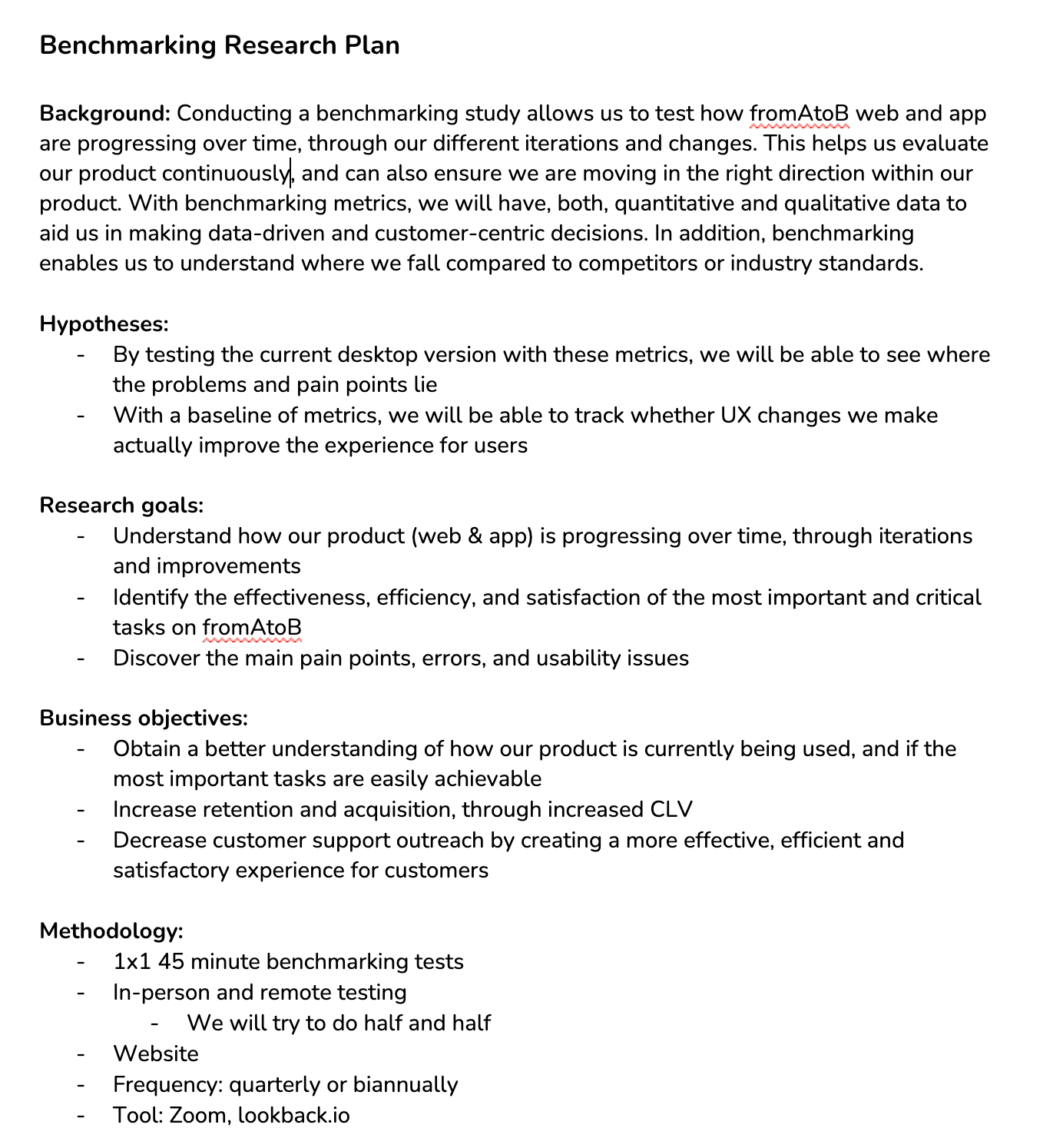

The most important artifact to create at the beginning of any research study is a research plan. This plan is critical because it enables you and your team to align exactly on what you’re trying to achieve in the research project and how. It also helps clarify the project and lets you focus as a researcher.

Within the plan, you will explore the goals of the research study. This is where the previous section on when to conduct a quantitative usability test becomes important.

The outcome of this type of study is a clear measurement of the usability of a product/service. Your goals are typically to:

Understand how a product/service is progressing over time through iterations and improvements

Identify the effectiveness, efficiency, and satisfaction of the most important and critical tasks on the product/service

Discover the main pain points, errors, and usability issues of the product/service

Here is the beginning of a research plan from a quantitative usability study I did while at a B2C travel company.

(In this study, I reference benchmarking, which I cover at the end of this article.)

Ensure the fidelity makes sense for quantitative testing

One of the biggest mistakes I made was attempting to conduct a quantitative usability test on a prototype. Why was this a mistake?

When you think about the point of quantitative usability testing, it is about rigorously assessing efficiency and effectiveness. With the prototype I was testing (and many prototypes out there), we generally design a clear, happy path. There aren’t as many distractions as there are in a real product, and there isn’t as much opportunity to get lost.

So, if we use a prototype to test usability, there might be only one path for participants to follow. Once the product is live, there are multiple paths they could get lost on.

What happened when I tested the prototype? We had high task success and a relatively good time on task. The problem was the prototype was unrealistic, and when we tested again on the live product (with all the bells, whistles, and distractions), our task success fell, and our time on task rose.

If you want to run a quantitative usability test, I highly recommend doing it on a live product, or on one that is as close to the live experience as possible. Use the rough prototype phase to gather qualitative feedback.

Choose your metrics

The metrics you choose will differ depending on one main factor:

Moderated versus unmoderated testing

If you are running a moderated study and you don’t have anyone there to help support you, I would recommend only tracking:

Time on task

Task success

The SUS or UMUX

I recommend this because it is extremely challenging to track all of this at once on your own. As I mentioned, I have tried to juggle way too many metrics, and in the end, I lacked confidence in my data. I wasn’t sure if I could trust how many errors I counted or if I had started the stopwatch at the right time.

If you have someone else (or multiple people) helping you during your moderated study, you can give each person a metric or two (it’s great to have multiple people measuring one metric but not always possible, of course) to track. That way, the entire test isn’t on you.

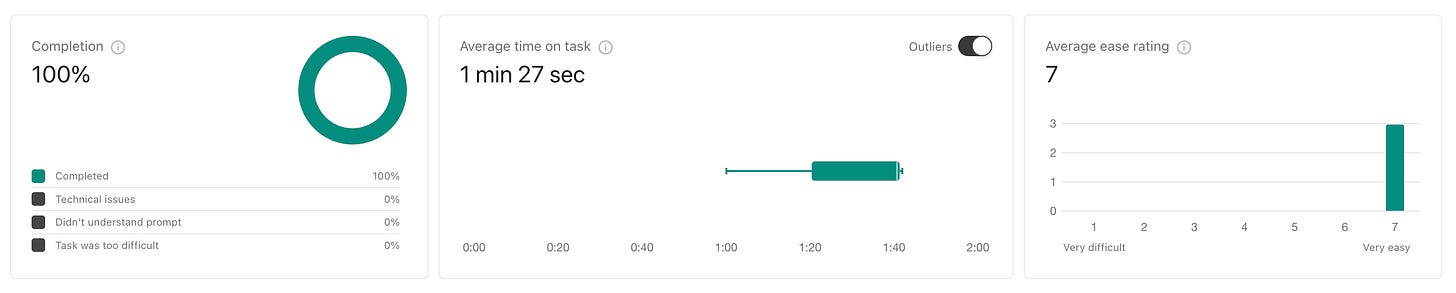

However, if you are running an unmoderated study with a tool, the tool usually offers predefined metrics that it measures automatically or that you can select.

This is hugely helpful, and I do recommend running a few moderated studies to make sure everything is okay and that your tasks are clear and straightforward, and then leaving the rest to run unmoderated whenever possible.

Whenever I have help and am running a moderated test, I like to measure:

Task success

Time on task

Number & type of errors

SEQ

SUS or UMUX

With these metrics, my team and I can get a really comprehensive understanding of the usability of our product/service, which also allows us to continue to track how our improvements change these metrics over time.

The one metric that can get tricky is task success. Task success can either be measured as binary — the participant passed or failed — or it can be measured across a spectrum. For the latter, you and your team have to decide, for each task, what passing versus struggling versus failing means.
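
The difference between binary and spectrum scoring can be sketched as follows. The three levels and their numeric weights (pass = 1, struggle = 0.5, fail = 0) are an assumption for illustration only; agree on your own levels, definitions, and weights per task with your team before the study. The binary rate counts only full passes.

```python
from collections import Counter

# Assumed weights for a three-level scale; agree on your own levels and what
# each one means for every task with your team before the study.
LEVEL_SCORES = {"pass": 1.0, "struggle": 0.5, "fail": 0.0}


def success_rate(outcomes):
    """Binary task success: the share of participants whose outcome is 'pass'."""
    if not outcomes:
        raise ValueError("no outcomes recorded")
    return sum(1 for o in outcomes.values() if o == "pass") / len(outcomes)


def spectrum_score(outcomes):
    """Average of the agreed level scores across participants (0 to 1)."""
    if not outcomes:
        raise ValueError("no outcomes recorded")
    return sum(LEVEL_SCORES[o] for o in outcomes.values()) / len(outcomes)


# Hypothetical outcomes for one task, keyed by participant.
outcomes = {"P1": "pass", "P2": "struggle", "P3": "fail", "P4": "pass", "P5": "pass"}
print(success_rate(outcomes))    # 0.6
print(spectrum_score(outcomes))  # 0.7
print(Counter(outcomes.values()))
```

Reporting the level counts next to the single number keeps struggles visible instead of folding them into an average.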

Identify the tasks

The next step is about understanding what you want to test. For quantitative usability testing, I recommend picking the most critical tasks a user has to perform to achieve their primary goals.

The best way to do this is to sit with your team and use previous research and usage data to understand the primary goals people have and the top tasks people are performing on your product/service.

Let’s take Booking.com as an example. I have never worked at Booking.com, so I don’t know if these are accurate, but I wanted an easy example. The top tasks for the website might be:

Searching for a hotel

Filtering for a specific type of hotel

Comparing different hotels

Choosing a hotel room

Viewing the booking details

I often get asked how many tasks to include in a usability test, but it really depends on the session length and the complexity of your tasks. I recommend taking anywhere from 60-90 minutes for a quantitative usability test and, within that time, testing anywhere from 5-15 tasks.

If you are using an unmoderated tool, this might differ, because you don’t have to spend as much time on an intro, outro, or all the other human things that come with moderated tests. Unmoderated tests usually take closer to 20-30 minutes, but, again, it depends on the number and complexity of your tasks.

I recommend taking no longer than 90 minutes and testing no more than 15 tasks — beyond that, it becomes cognitively overwhelming for both you and the participant. If you happen to have extra time, I recommend having a backlog of deprioritized tasks that you can then test.

Write the tasks

When I first started conducting usability tests, I had some pretty outlandish and funny tasks. No one trained me in task writing, and, as a fiction writer, I have quite an overactive imagination, so I ran wild with usability testing tasks.

I dug deep into the pits of my previous work and had a range of horrendous tasks such as:

Find how to download the brochure.

Book a trip you would love to go on.

Imagine you are booking a holiday and really looking forward to lying on the beach with a piña colada and a great book, listening to the waves crashing. How would you find the perfect holiday?

Imagine that you really want to adopt a dog. What dog would you adopt?

These make me laugh, but we all have to start somewhere. So, how do you write great usability testing tasks?

First, let’s define two important concepts:

User goal: This is the end goal for the participant

Task scenario: This describes what the participant will do, with relevant details and context.

For example, here is the difference between a user goal and a task scenario:

User goal: Make a doctor’s appointment

Task scenario: Make a doctor’s appointment for October 31st at 10 a.m. with Doctor Anderson

A user goal is the basic goal your user needs to accomplish on your platform (which you ideally listed above), while the task scenario adds relevance and context so that a participant can actually do something meaningful on your platform.

One more example:

User goal: Find a restaurant to eat at

Task scenario: Find a pizza restaurant in the West Village to book for October 31st at 6 p.m. for two people.

If you just told someone to find a restaurant to eat at, they would likely ask many questions, which is not great for a quantitative usability test because talking during tasks skews your data.

How to write a task scenario

Before we get into a step-by-step on how to write an effective task scenario, let’s first look at the things we should avoid in our task scenarios.

Using words in the interface. If you are trying to get users to click a button that says “sign up for our newsletter,” don’t tell them to “sign up for our newsletter.” Echoing words from your UI makes tasks easier for participants because it leads them straight to the correct answer.

Creating elaborate scenarios. As you saw above, I have created some elaborate scenarios. While they can be fun to write, they can really break the realistic nature of your test. Keep your scenario realistic, simple, and straightforward.

Offending or triggering the participant. Do your best to steer away from personal information that may be triggering. I once saw a usability test that said, “You’ve really put on the pounds recently and are feeling bad about your weight. How would you find a nutritional guide?” If you can’t avoid these topics, do your best to ensure the task scenarios are neutral.

Using marketing/company jargon. It can be easy for us to slip into company or marketing jargon in our tests, but it’s important to make sure we’re using participants’ language. Don’t ask them to select the “most awesome discount” (biased language).
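
A rough automated check can catch the first and last of these pitfalls before a dry run. This is a hypothetical helper, not part of any testing tool: it assumes you paste the visible labels from the screens you are testing, plus any internal or marketing terms your team tends to use, into two lists, and it flags any that appear word-for-word in a task. It won’t catch paraphrases or synonyms, so it complements reading tasks aloud rather than replacing it.

```python
import re

# Hypothetical lists: copy the visible labels from the screens you are testing
# and any internal or marketing terms your team tends to use.
UI_LABELS = ["Sign up for our newsletter", "Show on map"]
JARGON = ["most awesome discount", "VIP saver"]


def flag_leading_words(task_text, phrases):
    """Return the phrases that appear in the task text (case-insensitive, whole words)."""
    found = []
    for phrase in phrases:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        if re.search(pattern, task_text, flags=re.IGNORECASE):
            found.append(phrase)
    return found


task = "You want to hear about new offers. Sign up for our newsletter."
print(flag_leading_words(task, UI_LABELS + JARGON))  # ['Sign up for our newsletter']
```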

I recommend, for each user goal/critical task you identified, writing a task scenario. Let’s explore how to construct these wonderful task scenarios.

Start with your user goal/critical task. Take one of the user goals/critical tasks you identified above and start with that.

Include some context. Rather than just giving the user the basic task, you now briefly describe the context the person is in so that they can understand why they are performing the actual task. This gets the participant into a more realistic state of mind.

Give them relevant information. When recording metrics, you don’t want people guessing what type of information they should be inputting. For example, if you just tell someone to book a trip to NYC without dates or other details, your time on task might be hugely skewed. This is especially important in unmoderated tests, when there is no one who can clarify directions.

Specify the starting point. If there is a particular place you need the participant to start from, make sure that’s very clear in your instructions.

Ensure there is an end the user can reach. A reachable end is not only satisfying for participants but also tells you whether or not the participant successfully completed the task.

Write your task scenarios. Once you have this information for each goal, it’s time to write your task scenarios.

Let’s look at the example from above on Booking.com. The most critical user goals were:

Searching for a hotel

Filtering for a specific type of hotel

Comparing different hotels

Choosing a hotel room

Viewing the booking details

Here is how I would write task scenarios for this particular test:

User goal: Searching for a hotel

Context: Going on a holiday with your friend to Paris for a four-day trip and looking for a hotel in the city center.

Relevant details: No more than 3km from the city center (close to major tourist destinations such as the Louvre and Notre Dame), from October 28th-31st.

Starting point: Booking.com homepage

Endpoint for task scenario: Search results page

Task scenario: Imagine you're planning a four-day trip to Paris with your friend. Use Booking.com to find a hotel in the city center (no more than 3km away from the center) for your stay from October 28th-31st.

User goal: Filtering for a specific type of hotel

Context: You’re looking for more specific hotels

Relevant details: Looking for only 4-star hotels with free Wi-Fi

Starting point: Booking.com search results page

Endpoint for task scenario: Search results page with filters

Task scenario: You and your friend are looking for slightly more specific hotels in Paris. You want to find only 4-star hotels with free Wi-Fi.

If you want another formula to follow, you can use:

Action Verb + Object + Context + Goal + (Optional) Constraints + Endpoint

Let's break down each component:

Action Verb: This is the specific action you want the participant to perform.

Object: What the participant is interacting with (e.g., a button, a menu, a webpage).

Context: The scenario or context in which the action is taking place. This sets the stage for the task.

Goal: The purpose or objective of the task. What do you want the participant to achieve or find?

Constraints: Any limitations or conditions that apply to the task.

Endpoint: Where the participant is meant to end (mostly to ensure there is an endpoint for the task).

If you look at the above tasks from this lens, they would look like:

User goal: Searching for a hotel

Action: Find a hotel

Object: Booking.com search

Context: Four-day trip to Paris with friend, city center, October 28th-31st

Goal: Planning a trip

Constraints: no more than 3km away from the center

Endpoint: search results page

Imagine you're planning a four-day trip (goal) to Paris with your friend. Use Booking.com (object) to find a hotel (action) in the city center, no more than 3km away from the center (constraint), for your stay from October 28th-31st (context).
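
If you keep your tasks in a spreadsheet or script, storing each one as structured data makes it easy to spot a task that is missing part of the formula before it reaches a participant. This is a minimal sketch with hypothetical field names (`obj` stands in for the formula’s Object); only Constraints is treated as optional, matching the formula above.

```python
from dataclasses import dataclass, fields


@dataclass
class TaskScenario:
    """One task written with the Action + Object + Context + Goal +
    (optional) Constraints + Endpoint formula. Field names are illustrative."""
    user_goal: str
    action: str
    obj: str            # the formula's "Object"
    context: str
    goal: str
    endpoint: str
    scenario_text: str  # the wording participants actually see
    constraints: str = ""  # optional in the formula

    def missing_components(self):
        """List required fields that are still empty."""
        return [f.name for f in fields(self)
                if f.name != "constraints" and not getattr(self, f.name).strip()]


search_task = TaskScenario(
    user_goal="Searching for a hotel",
    action="Find a hotel",
    obj="Booking.com search",
    context="Four-day trip to Paris with friend, city center, October 28th-31st",
    goal="Planning a trip",
    constraints="No more than 3km away from the center",
    endpoint="Search results page",
    scenario_text=("Imagine you're planning a four-day trip to Paris with your friend. "
                   "Use Booking.com to find a hotel in the city center, no more than 3km "
                   "away from the center, for your stay from October 28th-31st."),
)
print(search_task.missing_components())  # []
```

A task with an empty endpoint, for example, would report `['endpoint']`, which is a useful nudge to decide where the task ends before you start timing it.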

It might take some time for you to write your tasks, but I promise it will be well worth the effort, especially if you are running a moderated study!

Recruit the participants

When it comes to running quantitative usability tests, we want to make sure we recruit people who make sense for our study. I once messed this up in a big way. I was doing a usability test for an e-commerce website on purchasing jeans, which included understanding the size and fit.

Well, I managed to recruit some participants who had never bought jeans online, nor did they regularly wear jeans. It was a failure because I couldn’t get them into a realistic scenario, regardless of how amazingly written my task scenarios were.

When you are recruiting for a study, I highly recommend asking yourself the following questions:

What are the particular behaviors I am looking for?

Do they need to have used the product? If so, how recently?

What goals are essential to our users?

What habits might they have?

With this information, you can list the ideal criteria of your participants and write a screener survey.
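
Once screener responses come in, the criteria can be applied consistently with a small filter. This sketch uses the jeans example above, with assumed criteria (bought jeans online within the last 180 days and wears jeans at least once a week) and made-up respondents; the field names and thresholds are placeholders for whatever your own screener asks.

```python
from datetime import date


def qualifies(resp, today, max_days_since_purchase=180):
    """Hypothetical criteria: a recent online jeans purchase and regular jeans wear."""
    last = resp.get("last_online_jeans_purchase")
    if last is None:  # never bought jeans online
        return False
    recent = (today - last).days <= max_days_since_purchase
    regular = resp.get("wears_jeans_per_week", 0) >= 1
    return recent and regular


# Made-up screener responses.
respondents = [
    {"id": "R1", "last_online_jeans_purchase": date(2024, 5, 2), "wears_jeans_per_week": 4},
    {"id": "R2", "last_online_jeans_purchase": None, "wears_jeans_per_week": 3},
    {"id": "R3", "last_online_jeans_purchase": date(2023, 1, 15), "wears_jeans_per_week": 5},
    {"id": "R4", "last_online_jeans_purchase": date(2024, 6, 20), "wears_jeans_per_week": 0},
]
today = date(2024, 7, 1)
print([r["id"] for r in respondents if qualifies(r, today)])  # ['R1']
```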

By the way, if you are looking for sample sizes for quantitative usability tests, you can find a lot of fantastic information in this article.

Conduct a dry run

I can’t tell you the number of times I have run a usability test and stumbled on weirdly worded tasks, unusable data, or confusing components of the task.

Do yourself a huge favor and do a dry run or two with your colleagues to get all the kinks and bugs out of your script before moving on to your participants. There is nothing worse than wasted sessions!

Run the study

If you are running an unmoderated study, this is pretty straightforward. Depending on the tool you are using, all you have to do is input your tasks, choose the measurements you need, and add any survey questions (e.g., the SEQ or UMUX) that aren’t already built in.

In terms of a moderated study, here is how you can structure the session with the example from Booking.com:

Introduction

Hi there! My name is Nikki, and I am a user researcher at Booking.com. First off, thank you so much for being willing to participate in this session — we really appreciate your time.

For the next 60 minutes, I will ask you to perform five different activities. I will give you all the relevant information you need for the activities, and you can tell me when you’re done with the activity. The purpose of this session is to understand how you interact with our website and identify any areas that may need improvement. Remember, this isn’t a test, so there is no one right way to do anything. Feel free to stop this session at any time if you need to.

Do you mind if I record this session? It is for notetaking purposes and will only be used internally; all of your answers will be kept confidential. Do you have any questions before we begin?

Warm-up questions

When was the last time you went on holiday? What was it like?

What’s your favorite holiday destination and why?

Tasks and surveys

For each task:

Read and send the task scenario: Imagine you're planning a four-day trip to Paris with your friend. Find a hotel in the city center (no more than 3km away from the center) for your stay from October 28th-31st. Go to Booking.com to start.

When the user begins, start the timer and stop it when:

The user completes the given task

The user indicates they want to give up (failed task)

Count the number of errors, if any, and record them at the end of the task

Record the task success or failure (or struggle)

Ask the user the SEQ:

Overall, how difficult or easy was the task to complete?

1 = very difficult, 7 = very easy

After all the tasks, ask the UMUX (shown here) or the SUS:

[This system’s] capabilities meet my requirements.

1 = strongly disagree, 7 = strongly agree

Using [this system] is a frustrating experience.

1 = strongly disagree, 7 = strongly agree

[This system] is easy to use.

1 = strongly disagree, 7 = strongly agree

I have to spend too much time correcting things with [this system].

1 = strongly disagree, 7 = strongly agree
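
If a note-taker logs the session on a laptop, the per-task steps above (start the timer, stop it when the participant completes the task or gives up, count errors, record success, ask the SEQ) can be captured in one record per task. `TaskRecord` is a hypothetical helper, not a feature of any tool; the clock is injectable so the example can use fixed times.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class TaskRecord:
    """Notes for one participant on one task (hypothetical helper)."""
    participant: str
    task: str
    clock: Callable[[], float] = time.monotonic  # swap in a fake clock for testing
    started: Optional[float] = None
    seconds: Optional[float] = None
    outcome: Optional[str] = None   # "pass", "struggle", or "fail"
    errors: List[str] = field(default_factory=list)
    seq: Optional[int] = None       # 1 = very difficult, 7 = very easy

    def start(self):
        """Start the timer when the participant begins the task."""
        self.started = self.clock()

    def log_error(self, error_type):
        self.errors.append(error_type)

    def stop(self, outcome):
        """Stop the timer when the participant completes the task or gives up."""
        if self.started is None:
            raise RuntimeError("start() was never called")
        if outcome not in ("pass", "struggle", "fail"):
            raise ValueError("outcome must be pass, struggle, or fail")
        self.seconds = self.clock() - self.started
        self.outcome = outcome

    def record_seq(self, rating):
        if rating not in range(1, 8):
            raise ValueError("SEQ rating must be from 1 to 7")
        self.seq = rating


# Example with a fake clock that returns fixed times.
ticks = iter([10.0, 85.5])
rec = TaskRecord("P1", "search", clock=lambda: next(ticks))
rec.start()
rec.log_error("wrong date field")
rec.stop("pass")
rec.record_seq(6)
print(rec.seconds, rec.outcome, len(rec.errors), rec.seq)  # 75.5 pass 1 6
```

In a live session, the default `time.monotonic` clock is used, which is unaffected by system clock changes mid-session.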

Follow-up questions

Thank you so much for completing those activities; that was very helpful. I just have a few follow-up questions to ask you based on your overall experience:

Talk me through your impressions of the overall experience

Describe the most confusing part of the experience

Explain one thing that was missing from the experience

Walk me through one thing you would change to improve the experience

Wrap-up

Thank you so much for participating today; we really appreciate your time. This session was extremely helpful for us. We will be analyzing the data from this test and making improvements based on your feedback. This will help us create a better user experience for everyone.

Again, all of your answers will be kept completely confidential. You will receive [compensation and when they will receive it].

Since this session was so valuable, would it be okay for me to contact you again in the future to participate in another research session?

Do you have any other questions for me? If any come up, you can contact me at [email]. Thank you again!

Analyze the results

If you are using a tool, such as one for unmoderated testing, you might have an easier time analyzing your results, as many of these tools can do it for you.

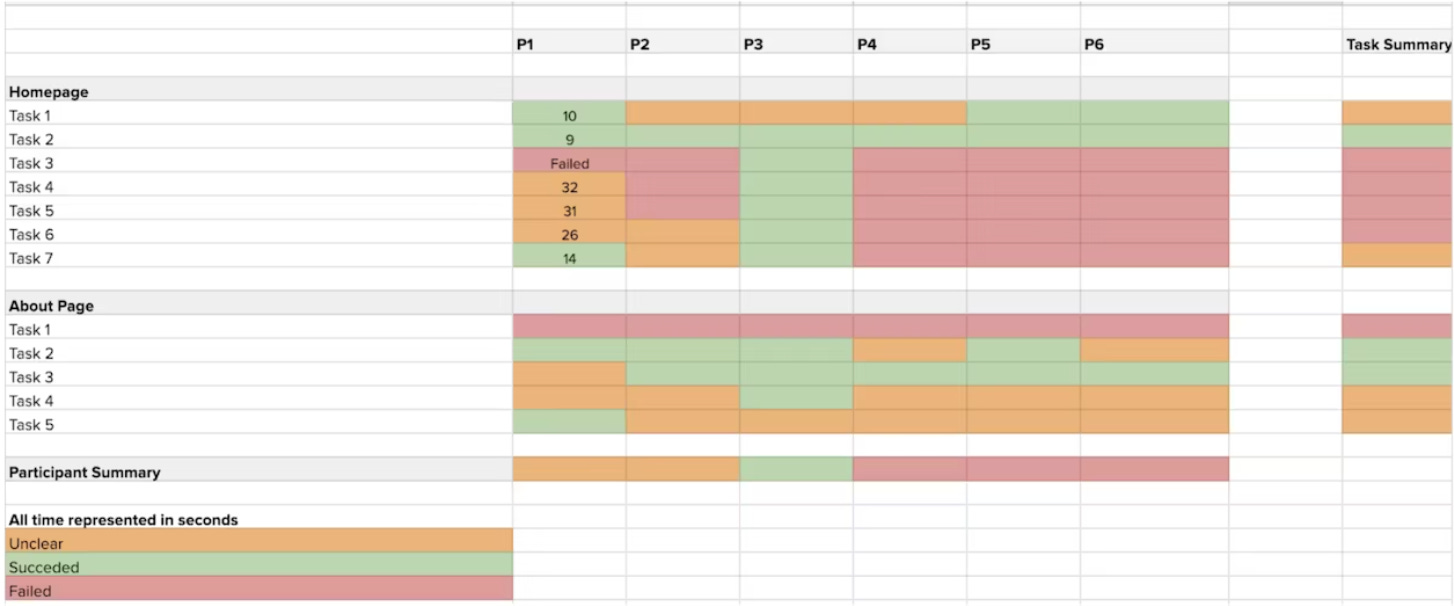

If you are looking for a way to visualize results, especially if you are stuck in the more manual, moderated world, you can use something called a stoplight chart.

A stoplight chart, also known as a red-yellow-green chart, is a visual tool used to analyze and communicate the severity of usability issues identified during a usability test. It categorizes issues into three levels of severity: Critical (red), Important (yellow), and Minor (green).

Here's a step-by-step guide on how to use a stoplight chart to analyze usability test results:

Step 1: Prepare Your Usability Test Results

Gather all the data and observations from your usability test. This includes video recordings, notes, and any metrics you decided to track. Make sure you have sat down with your team to define what task success and failure are (and what a struggle is if you aren’t measuring binary task success), as well as your error types.

Step 2: Create the Chart

You can create a stoplight chart using spreadsheet software like Microsoft Excel or Google Sheets. If you’re having a hard time getting started, check out this template.

Step 3: Assign task completion colors

For each task, assign colors to make it clear what the task success was for each participant and the average per task:

Red = failed task

Orange = struggled with the task

Green = succeeded with the task

Step 4: Populate the Chart

For each participant and task, record the task completion, time on task, and any other metrics you tracked.

This chart just shows the basics of task success and time on task, but add anything else that is necessary for you to report on!
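
If you would rather generate the chart than fill it in by hand, this sketch builds the rows (one per participant, a color and time on task per task, plus a summary row with color counts and mean time) and can save them as a CSV that opens in Excel or Google Sheets. The colors are written as text; you would still apply the cell fills yourself or with conditional formatting. The results are hypothetical.

```python
import csv
from collections import Counter
from statistics import mean

# Task-completion colors from Step 3.
COLORS = {"fail": "Red", "struggle": "Orange", "pass": "Green"}


def stoplight_rows(results, tasks):
    """Header, one row per participant, and a summary row per task."""
    header = ["Participant"]
    for t in tasks:
        header += [f"{t} result", f"{t} time (s)"]
    rows = [header]
    for participant, by_task in results.items():
        row = [participant]
        for t in tasks:
            outcome, seconds = by_task[t]
            row += [COLORS[outcome], seconds]
        rows.append(row)
    summary = ["All participants"]
    for t in tasks:
        counts = Counter(COLORS[results[p][t][0]] for p in results)
        summary.append(", ".join(f"{c}: {counts[c]}" for c in ("Green", "Orange", "Red")))
        summary.append(round(mean(results[p][t][1] for p in results), 1))
    rows.append(summary)
    return rows


def write_stoplight_csv(rows, path):
    """Save the rows as a CSV file."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(rows)


# Hypothetical results: (outcome, seconds) per participant per task.
results = {
    "P1": {"Search": ("pass", 95), "Filter": ("struggle", 160)},
    "P2": {"Search": ("fail", 210), "Filter": ("pass", 70)},
    "P3": {"Search": ("pass", 120), "Filter": ("pass", 65)},
}
for row in stoplight_rows(results, ["Search", "Filter"]):
    print(row)
# write_stoplight_csv(stoplight_rows(results, ["Search", "Filter"]), "stoplight.csv")
```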

Step 5: Categorize Issues

Review the usability issues you've identified during the usability test, and categorize each issue by severity:

Critical: These are issues that severely hinder or prevent users from completing essential tasks or achieving their goals. They can result in a high level of frustration or abandonment of the task or system.

Important: These issues are significant but may not be as severe as critical ones. They can cause user frustration or confusion but are not complete showstoppers.

Minor: These are minor issues or suggestions for improvement that don't significantly impact the user experience. They may be cosmetic or inconsequential.

Step 6: Describe Each Issue

You can put this in your chart or have it in a supplemental report. Provide a brief but clear description of each issue you've categorized. Be concise and specific so that anyone reviewing the chart can understand the problem.

Step 7: Prioritize and Plan Action

After creating the stoplight chart, review it to identify patterns and prioritize which issues to address first. Address critical issues immediately, as they have the most significant impact on usability and user satisfaction. Address important issues next, and tackle minor issues whenever there is space in the roadmap.
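
Ordering the issue list follows directly from the severity levels in Step 5. This sketch sorts issues by severity and, as an assumed tie-breaker that isn’t part of the stoplight method itself, by how many participants ran into each issue. The issues are hypothetical.

```python
# Severity levels from Step 5, most urgent first.
SEVERITY_ORDER = {"Critical": 0, "Important": 1, "Minor": 2}


def prioritize(issues):
    """Sort issues by severity, then by how many participants hit each one.

    The participant-count tie-breaker is an assumption, not part of the
    stoplight method itself.
    """
    return sorted(issues, key=lambda i: (SEVERITY_ORDER[i["severity"]], -i["participants"]))


# Hypothetical issues from one test.
issues = [
    {"issue": "Date picker resets after changing guests", "severity": "Important", "participants": 3},
    {"issue": "Search button hidden below the fold on mobile", "severity": "Critical", "participants": 4},
    {"issue": "Filter label truncated", "severity": "Minor", "participants": 5},
    {"issue": "Star-rating filter hard to find", "severity": "Critical", "participants": 2},
]
for i in prioritize(issues):
    print(f'{i["severity"]:<9} {i["participants"]} participants  {i["issue"]}')
```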

Present

Once you have your stoplight chart, you can go about presenting your findings in a few different ways, depending on the needs of your team.

For many quantitative usability tests, I just sit with my team to review the videos of the task failures and most critical issues. With this, we brainstorm ideas or solutions to fix the issues.

If you need to (and sometimes it’s necessary!), you can create a usability testing report where you go into more detail with annotations and text.

If you are looking for help on a report, check out this template!

Continuous benchmarking to show the impact

The beauty of quantitative usability testing really comes through when you do it continuously. If we go back to the original question that spurred on my usage of quantitative usability testing — “how do we know these changes are improving the usability?” — we get to continuous benchmarking.

This means repeating these quantitative usability tests, either on a set schedule (e.g., every quarter or every six months) or whenever changes are made.

For each study, you can create a new stoplight chart that visually shows the improvements you’re making over time. You can also use this information to calculate the kind of impact I described earlier on important metrics like acquisition, retention, and revenue. See this article for more on how UXR and these metrics fit together.
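
Comparing two benchmark rounds is simple arithmetic, like the 50% reduction in time on task from the travel checkout example earlier. This sketch reports time on task as a percent change and rates and scores as point differences, which are often easier to read for values already on a fixed scale; that split is a presentation choice, not a rule. The round values are made up.

```python
def percent_change(before, after):
    """Percentage change from a baseline round to a later round."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before * 100


def point_change(before_rate, after_rate):
    """Difference between two rates (0 to 1), in percentage points."""
    return (after_rate - before_rate) * 100


# Hypothetical benchmark rounds for one task (not real study data).
baseline = {"success_rate": 0.55, "mean_time_s": 240.0, "umux": 62.0}
retest = {"success_rate": 0.80, "mean_time_s": 120.0, "umux": 74.0}

print(f"Time on task: {percent_change(baseline['mean_time_s'], retest['mean_time_s']):+.1f}%")
print(f"Task success: {point_change(baseline['success_rate'], retest['success_rate']):+.1f} points")
print(f"UMUX: {retest['umux'] - baseline['umux']:+.1f} points")
```

Keep tasks, metrics, and participant criteria the same between rounds; otherwise the change reflects the study design as much as the product.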

Join my membership!

If you’re looking for even more content, a space to call home (a private community), and live sessions with me to answer all your deepest questions, check out my membership (you get all this content for free within the membership), as it might be a good fit for you!
